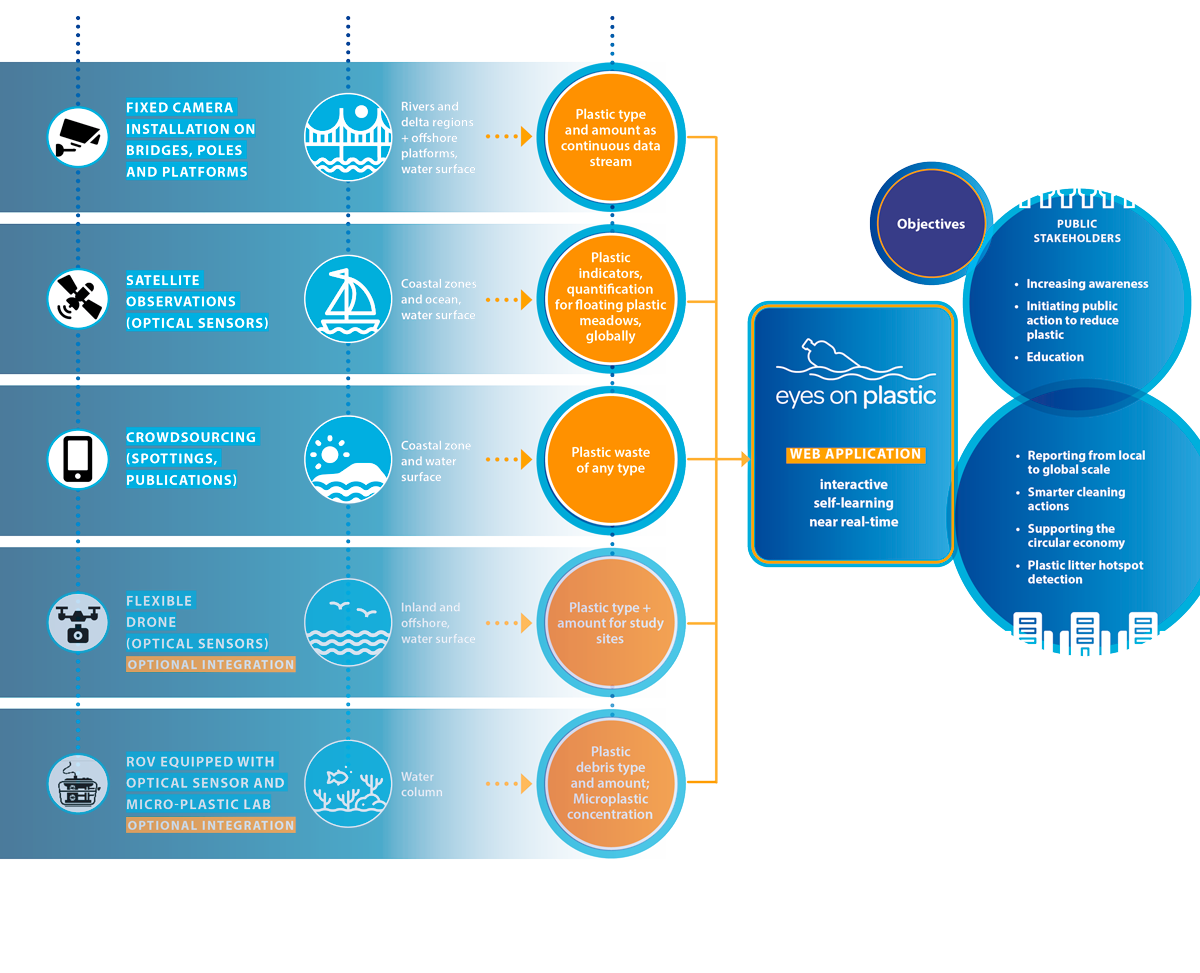

A holistic plastic monitoring approach

Detecting plastics in rivers around the world depends on using synergies between data sources. We therefore combine multiple components (CCTV cameras, satellite observation, citizen science, drones and underwater cameras) into one smart monitoring solution.

Data sources

Surveillance cameras – CCTV

Surveillance cameras provide real-time, extremely high-resolution images 24/7. We access them and apply Artificial Intelligence (AI) to identify floating debris over time. For this, we use an object recognition technique (YOLOv5) that we have trained on oblique CCTV camera footage to identify floating debris and plastic.

The cameras observe river transects and coastal zones. Their on-site images complement the satellite data and are automatically ingested into the App. The convolutional neural network (CNN) that we use to detect floating debris and plastic objects was trained with data collected in Indonesia, Italy, Bavaria and Brazil. For the data collection, we used freely available CCTV cameras on bridge poles, as well as mobile phones and sports cameras.
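To illustrate what "identifying floating debris over time" can look like once a detector has produced its output, here is a minimal sketch that counts confident detections per hour. It does not show the Eyes on Plastic pipeline itself. The `Detection` record, the class names `plastic` and `floating_debris`, the 0.5 confidence threshold and the sample values are all hypothetical and chosen only for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Detection:
    """One detected object in one CCTV frame (hypothetical record layout)."""
    timestamp: datetime   # capture time of the frame
    label: str            # class name predicted by the detector
    confidence: float     # detector score between 0 and 1


def hourly_counts(detections, labels=("plastic", "floating_debris"), min_confidence=0.5):
    """Count detections of the given labels at or above the threshold, per hour."""
    counts = Counter()
    for det in detections:
        if det.label in labels and det.confidence >= min_confidence:
            # Truncate the timestamp to the start of its hour.
            hour = det.timestamp.replace(minute=0, second=0, microsecond=0)
            counts[hour] += 1
    return dict(sorted(counts.items()))


# Made-up sample detections, not real measurements.
detections = [
    Detection(datetime(2023, 5, 1, 8, 5), "plastic", 0.91),
    Detection(datetime(2023, 5, 1, 8, 40), "floating_debris", 0.62),
    Detection(datetime(2023, 5, 1, 8, 55), "plastic", 0.30),  # below threshold, ignored
    Detection(datetime(2023, 5, 1, 9, 10), "plastic", 0.77),
    Detection(datetime(2023, 5, 1, 9, 15), "bird", 0.95),     # other class, ignored
]

for hour, n in hourly_counts(detections).items():
    print(hour.isoformat(), n)
```

With the sample data, two detections fall into the 08:00 hour and one into the 09:00 hour. In practice the threshold and the time bin would need tuning per camera, since viewing angle and lighting affect detector scores.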

Satellite Observation

Satellite data enable a synoptic view of aquatic environments in space and time. They make it possible to locate and quantify water quality phenomena such as algal blooms, turbidity and sediment loads. In Eyes on Plastic, satellite-derived turbidity measurements serve as a proxy for plastic in the water column. EOMAP’s verified Earth observation Workflow System (EWS) and the physics-based pre-processing and analytics (Modular Inversion Program, MIP) are applied to routinely analyse Sentinel-2 satellite data.

In addition, satellite-based data can help to identify floating debris patches.

For this purpose, high-resolution spectral sensors on satellites are used. They make it possible to classify, and later quantify, the plastic components of floating debris on the water surface of rivers and coastal zones.

Crowdsourcing

The public is a key and integrated part of the technological concept.

People from all over the world are invited to upload occurrences of plastic waste. These can be plastic waste they have spotted, but also clean-up activities. Automated geo-positioning while uploading a new photo ensures smooth usability. In return, the crowdsourcing approach will have a positive, recurring effect on the automated plastic detection applied to the fixed (CCTV) cameras: the uploaded images will be used to train and improve the AI algorithms.

Citizens are also welcome to upload links to relevant publications from research and mass media. This concept offers manifold citizen science opportunities to share knowledge and experience.

Drones

The very high-resolution imagery from drones is highly valuable for identifying plastic objects (>macro-size), and has prompted us to think about the business aspect of this data source. In the future, we will consider using RGB drone data from stakeholders to demonstrate the technical feasibility.

This is why drones – so far – are included as an optional source.

Underwater Camera / ROV

Plastic litter is not only floating on the water surface. Therefore, Eyes on Plastic also aims at underwater camera data analytics. Similar to the camera systems above water, we plan to deploy an embedded optical object detection system onboard an ROV. This will make it possible to monitor plastic litter concentration in the water column on the decimetre scale.

We are still searching for reliable training data and have to cope with the fact that objects are often covered in dirt or biofouling.

Just like drones, ROVs are therefore shown as an optional data source.